If you’re reading this, you’re probably trying to counter disinformation.

Good news!

You’re not alone.

To make it easier to share and collaborate with others who have the same aim, you first have to determine the criteria that guide your action.

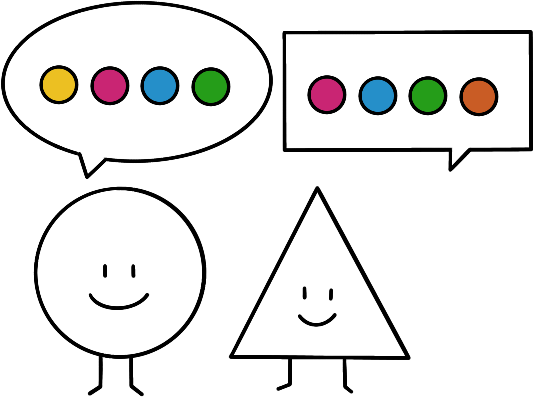

There is no single definition of information manipulation, nor of which kinds warrant a reaction. Each organization has its own mandate and, along with it, specific criteria to distinguish “legit” information from disinformation.

For instance, a Foreign Affairs Ministry will treat “foreign source” as a crucial criterion. A europhile association will target only eurosceptic content, making europhobia a decisive criterion, whereas a nationalist group will focus on fact-checking EU-supportive content.

Spelling out your criteria lets you pool resources with other actors while preserving their mandate… and your own, by making it explicit!

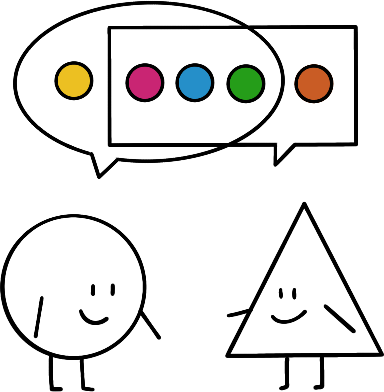

The intersection of each actor’s criteria defines what disinformation is to them.

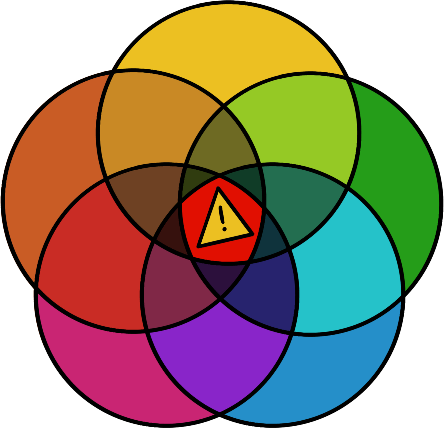
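
One way to picture this is to treat each actor's criteria as a set of labels, where content counts as disinformation for that actor only if it carries all of them. The sketch below is a minimal illustration under that assumption: the `Actor` class, the `qualifies` helper and the "false claim" label are hypothetical, and only "foreign source" and "europhobia" come from the examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    name: str
    criteria: frozenset  # labels this actor requires (illustrative values)


def qualifies(actor, content_labels):
    """Content is disinformation for an actor only if it meets every criterion."""
    return actor.criteria <= set(content_labels)


# Hypothetical actors; "false claim" is an assumed criterion for illustration.
ministry = Actor("Foreign Affairs Ministry", frozenset({"foreign source", "false claim"}))
association = Actor("Europhile association", frozenset({"europhobia", "false claim"}))

# Labels a human analyst might have attached to one piece of content.
content = {"foreign source", "false claim"}

in_ministry_scope = qualifies(ministry, content)
in_association_scope = qualifies(association, content)

# Criteria both actors share: a natural starting point for collaboration.
shared = ministry.criteria & association.criteria
```

Here the content falls within the ministry's mandate but not the association's, while the shared criterion shows where the two could pool their efforts without giving up their own scope.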

Now that we know how to differentiate legitimate content from manipulated information, let’s clarify the process actors go through to counter it.

Detection

First, you need to detect suspicious content on the Internet.

This can be done with human intelligence or, more likely, with software that scans specific sources to highlight suspicious content. At this stage, the only goal is to identify which content deserves further resources.
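
As a rough illustration of the software route, the sketch below flags items whose text contains a term from a watchlist. It is deliberately naive and rests on assumptions: the `Item` structure, the `detect` function, the source names and the watchlist terms are all hypothetical, and real detection tools rely on far richer signals than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    source: str  # where the content was collected (hypothetical field)
    text: str


# Hypothetical watchlist; a real one would derive from your own criteria.
WATCHLIST = ("miracle cure", "secret plan")


def detect(items, watchlist=WATCHLIST):
    """Return (item, matched_terms) pairs that deserve a closer human look."""
    flagged = []
    for item in items:
        lowered = item.text.lower()
        hits = [term for term in watchlist if term in lowered]
        if hits:
            flagged.append((item, hits))
    return flagged


items = [
    Item("forum-a", "Officials hide a SECRET PLAN to ban cash"),
    Item("blog-b", "Local bakery wins regional award"),
]
flagged = detect(items)
```

The output is only a shortlist: nothing in it says the flagged content is actually disinformation, which is a judgement left to a human.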

Qualification

Human intervention is then necessary to qualify content according to your criteria.

You need to strike a balance between obtaining enough certainty that a possible reaction is appropriate, and being fast enough for it to be impactful.

Very often, what was detected as suspicious does not qualify as actually harmful, hence the importance of this phase.

Reaction

For the pieces of content that do qualify as disinformation, your organisation will have some reaction mechanism to activate.

It might be issuing a public rebuttal or a takedown notice, exposing the mischief, activating networks of elves… or simply doing nothing, because the risk of backfire always exists!

Attribution

Another resource-consuming activity is attributing the origin of disinformation to specific agents.

While useful to inform the best course of action, this phase is not needed to react. Besides, attribution does not share the urgency that an impactful reaction depends on. Related practices and tools are part of the forensics and open-source intelligence (OSINT) fields. Attribution brings the most value by linking together seemingly independent campaigns, revealing patterns that help refine detection and reaction.

Research

Going beyond specific campaigns, research adds to the knowledge of information manipulation.

Studying past disinformation efforts makes it easier to identify and assess future ones. It can also be used to evaluate the effectiveness of the tools and practices that counter them. Information manipulation has no dedicated academic field; most of the current research on the topic happens at the intersection of social and computer sciences.

Prevention

Prevention raises awareness and increases media literacy and societal cohesion.

Disinformation targets human societies. While research can help us understand how attacks work, and the detection-qualification-reaction sequence can defuse many of them, the most viable long-term solution is to immunize the entire social body.

Foster collaboration and increase impact

This collaborative resource aims to empower all actors countering information manipulation to grow and improve.

To do so, it unifies vocabulary and provides concepts like the ones you just read. It also gathers exemplary, sharable case studies and describes the best practices, tools and actors in the field. Furthermore, it consolidates opportunities such as funding.

Your contributions are more than welcome.